Welcome back to the Kubernetes Homelab Series! After building a strong foundation in Part 1 with a two-node Kubernetes cluster, it’s time to take our homelab to the next level by addressing one of the most critical needs in any Kubernetes environment: persistent storage.

In this post, we’ll explore how to combine Longhorn and MinIO to create a robust storage solution:

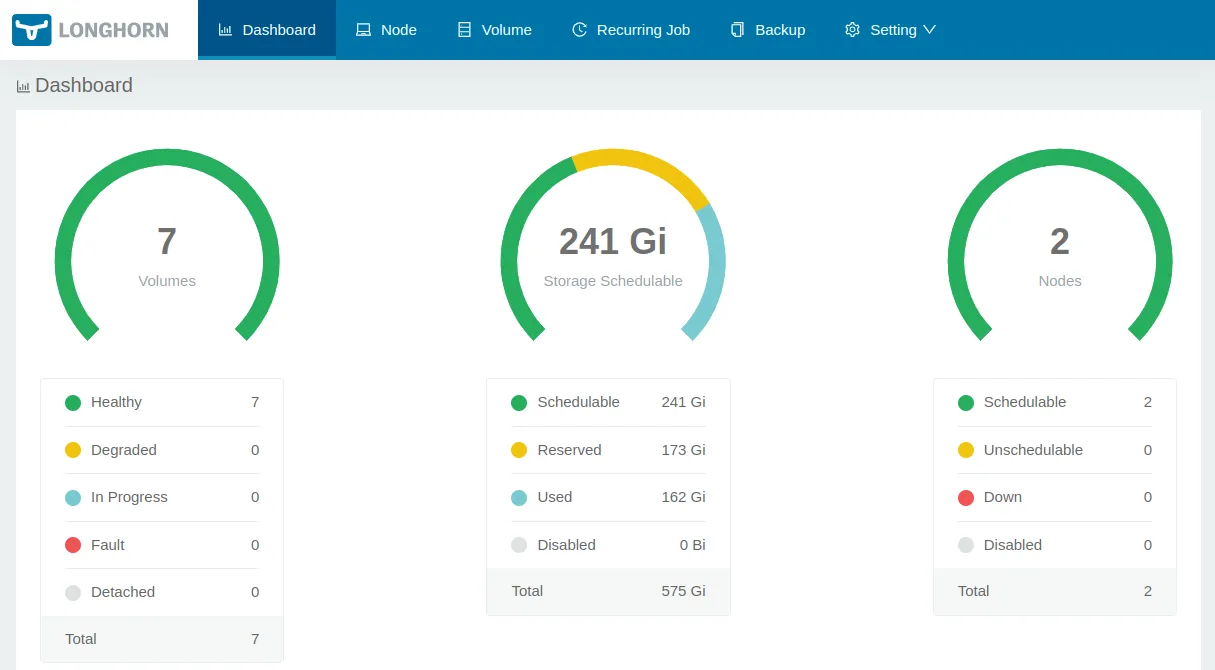

- Longhorn: Distributed block storage designed for Kubernetes, providing replicated volumes, snapshots, and automated backups.

- MinIO: S3-compatible object storage, ideal for leveraging your NAS as a backup target.

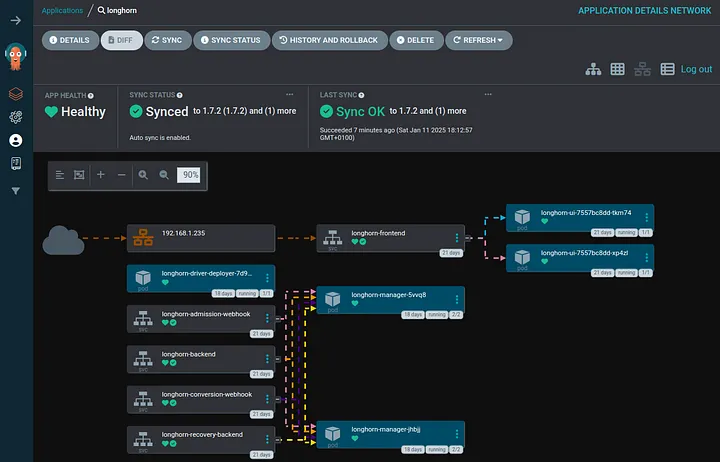

- GitOps with ArgoCD: Ensures all configurations are managed declaratively, making your setup reliable and version-controlled.

By the end of this guide, you’ll have a scalable and resilient storage solution that is perfect for modern applications and homelabs alike.

Why Persistent Storage Matters

Imagine your Kubernetes cluster running perfectly, until a pod restarts or a node reboots and you lose important data. Persistent storage keeps your data intact through these disruptions. With Longhorn and MinIO, you’re not just ensuring data persistence; you’re adding resilience, scalability, and disaster recovery capabilities to your homelab.

What is Longhorn?

Longhorn is an open-source distributed block storage system designed specifically for Kubernetes. It simplifies persistent storage management and provides features like automated volume provisioning, snapshots, and backups.

Key Features of Longhorn:

- Built for Kubernetes: Fully integrated with Kubernetes APIs.

- Distributed Storage: Data is replicated across nodes, ensuring resilience against node failures.

- Snapshots and Backups: Includes automated options for easy management.

- Lightweight: Designed to work efficiently, even in homelabs with limited resources.
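To make "automated volume provisioning" concrete, here is a minimal PersistentVolumeClaim sketch. It assumes the StorageClass name that the Longhorn chart creates, longhorn; the claim name demo-data and the 2Gi size are hypothetical. Once Longhorn is installed, applying a claim like this is enough for Longhorn to create and replicate the backing volume.

```yaml
# Hypothetical claim: Longhorn provisions the volume behind it on demand
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-data             # hypothetical name
  namespace: default
spec:
  accessModes:
    - ReadWriteOnce           # mounted read-write by a single node
  storageClassName: longhorn  # StorageClass created by the Longhorn chart
  resources:
    requests:
      storage: 2Gi
```

Because the Longhorn custom values set persistence.defaultClass: true, the storageClassName line could also be omitted.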

Part 1: Deploying Longhorn with GitOps

Why GitOps?

Managing Kubernetes clusters can become overwhelming. GitOps transforms this complexity into simplicity by using Git as the single source of truth. This approach ensures consistency, automation, and version control for all your configurations.

There are several tools to implement GitOps, such as Flux and ArgoCD. For my homelab, I chose ArgoCD because of its intuitive interface and seamless integration with Kubernetes.

Key features of ArgoCD:

- Automated Sync: Keeps your cluster in sync with Git automatically or on-demand.

- Declarative Management: Ensures your cluster’s state always matches what’s defined in Git.

- User-Friendly UI: A clean interface for managing applications and troubleshooting issues.

- Multi-Cluster Support: Easily manages multiple clusters from one place.

Let’s start by setting up ArgoCD to manage our cluster.

Step 1: Install ArgoCD

First, add the ArgoCD Helm repository and create a namespace:

```bash
helm repo add argo https://argoproj.github.io/argo-helm
helm repo update
kubectl create namespace argocd
```

Next, install ArgoCD using Helm:

```bash
helm install argocd argo/argo-cd -n argocd
```

To access the ArgoCD UI, forward the server port to your machine:

```bash
kubectl port-forward svc/argocd-server -n argocd 8080:443
```

Navigate to https://localhost:8080 and log in as admin, using the initial password stored in this secret:

```bash
kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d
```

You’re now ready to use ArgoCD!

Step 2: Deploy Longhorn

To install Longhorn, start by adding its Helm repository:

```bash
helm repo add longhorn https://charts.longhorn.io
helm repo update
```

Custom Configuration

Create an apps/longhorn/custom-values.yaml file in your Git repository to hold the Longhorn configuration. Here’s an example:

```yaml
persistence:
  defaultClass: true

defaultSettings:
  backupTarget: "s3://k8s-backups@us-east-1/"
  backupTargetCredentialSecret: "minio-credentials"

service:
  ui:
    type: LoadBalancer
    port: 80
```

The backupTarget URL follows Longhorn’s s3://<bucket>@<region>/ format, so a region is required even when the target is MinIO rather than AWS. Because MinIO emulates the Amazon S3 API, S3 clients still sign their requests with a region, and us-east-1 (MinIO’s default region) works as a dummy value. The actual MinIO address comes from the AWS_ENDPOINTS key in the credentials secret below.
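Two optional tweaks may be worth considering on a two-node cluster like the one from Part 1. Longhorn defaults to three replicas per volume, so with only two nodes the third replica has nowhere to be scheduled and volumes can report as degraded; lowering the count to 2 avoids that. The backupTarget URL can also carry a path after the region, which keeps Longhorn’s backups under their own prefix in the bucket. This is a sketch assuming the chart’s persistence.defaultClassReplicaCount and defaultSettings.defaultReplicaCount keys; the longhorn path prefix is illustrative.

```yaml
persistence:
  defaultClass: true
  defaultClassReplicaCount: 2   # replicas for volumes created through the longhorn StorageClass

defaultSettings:
  defaultReplicaCount: 2        # replicas for volumes created from the Longhorn UI
  backupTarget: "s3://k8s-backups@us-east-1/longhorn"   # bucket@region/path (path is illustrative)
  backupTargetCredentialSecret: "minio-credentials"
```

Two replicas still survive the loss of either node, which is the most a two-node cluster can offer anyway.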

MinIO Credentials Secret

Define the MinIO credentials in a Kubernetes secret:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: minio-credentials
  namespace: longhorn-system
type: Opaque
data:
  AWS_ACCESS_KEY_ID: <base64-encoded-access-key>
  AWS_SECRET_ACCESS_KEY: <base64-encoded-secret-key>
  AWS_ENDPOINTS: <base64-encoded-endpoint>
```

Apply the secret:

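```bash
# longhorn-system must exist before a secret can be created in it;
# ArgoCD only creates the namespace once the Longhorn application syncs
kubectl create namespace longhorn-system
kubectl apply -f minio-credentials.yaml
```

Push the changes to your Git repository:

```bash
git add apps/longhorn/
git commit -m "Add custom values for Longhorn"
git push
```

Deploy Longhorn with ArgoCD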

Create an ArgoCD application manifest for Longhorn:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: longhorn
  namespace: argocd
spec:
  project: default
  sources:
    - repoURL: 'https://charts.longhorn.io/'
      chart: longhorn
      targetRevision: 1.7.2
      helm:
        valueFiles:
          - $values/apps/longhorn/custom-values.yaml
    - repoURL: 'https://github.com/pablodelarco/kubernetes-homelab'
      targetRevision: main
      ref: values
  destination:
    server: 'https://kubernetes.default.svc'
    namespace: longhorn-system
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
    syncOptions:
      - CreateNamespace=true
```

Save this file as longhorn.yaml and apply it:

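```bash
kubectl apply -f longhorn.yaml
```

Using multiple sources lets ArgoCD pull the upstream Longhorn Helm chart (pinned to 1.7.2 here) while reading your values file from your own Git repository, which the ref: values source exposes as $values. Upgrading Longhorn then becomes a one-line change to targetRevision, and every configuration change stays version-controlled.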

Part 2: Deploying MinIO

What is MinIO?

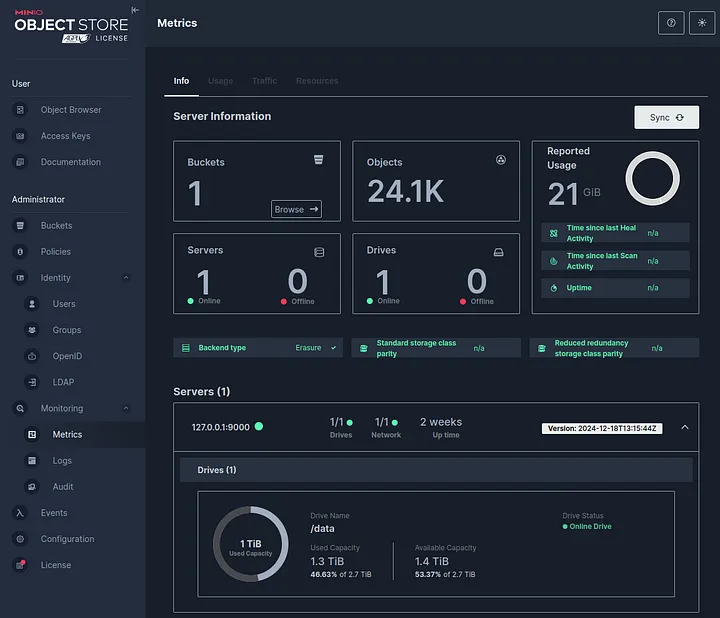

When it comes to object storage in Kubernetes, MinIO is a go-to solution. It’s open-source, high-performance, and S3-compatible — making it an ideal choice for both homelabs and production environments.

Key Features:

- S3 API Compatibility: Works seamlessly with S3-compatible tools and applications.

- Backup Storage: Acts as a robust and scalable target for Longhorn backups or other Kubernetes workloads.

- Homelab-Friendly: Easily integrates with existing NAS infrastructure via NFS shares, leveraging the resources you already have.

Step 1: Configure NFS Storage for MinIO

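To use MinIO with NFS for storing backups, we’ll define a StorageClass. This assumes an NFS provisioner is already running in the cluster; the provisioner field must match the name that provisioner registers: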

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs
provisioner: nfs
parameters:
  server: 192.168.1.42         # Replace with your NAS IP
  path: /volume1/minio-backup  # Path to the NFS folder
reclaimPolicy: Retain
volumeBindingMode: Immediate
```

Why Use a StorageClass?

- A StorageClass enables dynamic provisioning of PersistentVolumes (PVs). Kubernetes creates PVs and binds them to PersistentVolumeClaims (PVCs) without requiring manual setup.

- The reclaimPolicy: Retain setting keeps the PV, and your backup data, when its PVC is deleted, offering an extra layer of safety.

Apply the StorageClass:

```bash
kubectl apply -f nfs-storageclass.yaml
```
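As one concrete example, if your NFS provisioner is the Kubernetes csi-driver-nfs project, the equivalent StorageClass would look roughly like the sketch below. It assumes that driver is installed; note that it registers as nfs.csi.k8s.io and names the export directory share rather than path. The NAS address and export are the example values from above, and the NFS version in mountOptions is an assumption to adjust for your NAS.

```yaml
# Sketch assuming csi-driver-nfs is installed in the cluster
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs
provisioner: nfs.csi.k8s.io
parameters:
  server: 192.168.1.42          # NAS IP (example value from above)
  share: /volume1/minio-backup  # exported directory on the NAS
reclaimPolicy: Retain           # keep the PV and its data when the PVC is deleted
volumeBindingMode: Immediate
mountOptions:
  - nfsvers=4.1                 # assumption: match the NFS version your NAS serves
```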

Step 2: Configure MinIO Custom Values

Save the following as apps/minio/custom-values.yaml in your repository:

```yaml
nameOverride: "minio"
fullnameOverride: "minio"
clusterDomain: cluster.local

image:
  repository: quay.io/minio/minio
  tag: RELEASE.2024-12-18T13-15-44Z
  pullPolicy: IfNotPresent

mcImage:
  repository: quay.io/minio/mc
  tag: RELEASE.2024-11-21T17-21-54Z
  pullPolicy: IfNotPresent

mode: standalone

# Root credentials come from an existing Secret instead of these fields
rootUser: ""
rootPassword: ""
existingSecret: minio-login

persistence:
  enabled: true
  storageClass: nfs          # the NFS StorageClass defined in Step 1
  accessMode: ReadWriteMany
  size: 150Gi
  annotations: {}

service:
  type: LoadBalancer
  port: "9000"               # S3 API
  nodePort: 32000
  annotations: {}
  loadBalancerSourceRanges: {}

consoleService:
  type: LoadBalancer
  port: "9001"               # web console
  nodePort: 32001
  annotations: {}
  loadBalancerSourceRanges: {}

# UID/GID must line up with the permissions on the NFS share (see below)
securityContext:
  enabled: true
  runAsUser: 65534
  runAsGroup: 100
  fsGroup: 100
  fsGroupChangePolicy: "OnRootMismatch"

metrics:
  serviceMonitor:
    enabled: true            # ServiceMonitor is a Prometheus Operator CRD

resources:
  requests:
    memory: 2Gi
    cpu: 500m
  limits:
    memory: 4Gi
    cpu: 1

customCommands: []
```

Deployment Challenge: MinIO Permission Issues

While deploying MinIO with NFS-backed storage, I hit a BackOff error caused by a mismatch between MinIO’s securityContext settings and the ownership of the NFS-mounted directory.

Solution: Updating the securityContext to runAsUser: 65534, runAsGroup: 100, and fsGroup: 100 resolved the issue by aligning the container’s user and group with the permissions on the NFS share. UID 65534 is the conventional nobody user, which NFS servers commonly map squashed client requests to.

This issue highlighted the importance of configuring storage permissions correctly when working with Kubernetes.
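The MinIO values reference existingSecret: minio-login, so that Secret has to exist in the minio namespace before the pod can start. The sketch below assumes the chart reads the rootUser and rootPassword keys from it; the credential values are placeholders. Using stringData instead of data lets Kubernetes handle the base64 encoding, and the same approach works for the minio-credentials secret. Keep files like this out of your public Git repository.

```yaml
# Placeholder credentials; never commit real values to a public repository
apiVersion: v1
kind: Secret
metadata:
  name: minio-login
  namespace: minio        # create the namespace first if ArgoCD has not synced yet
type: Opaque
stringData:               # plain text here; the API server stores it base64-encoded under data
  rootUser: <admin-user>
  rootPassword: <admin-password>   # MinIO requires at least 8 characters
```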

Step 3: Deploy MinIO with ArgoCD

Add the MinIO Helm repository:

```bash
helm repo add minio https://charts.min.io/
helm repo update
```

Define the ArgoCD application for MinIO:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: minio
  namespace: argocd
spec:
  project: default
  sources:
    - repoURL: 'https://charts.min.io/'
      chart: minio
      targetRevision: 5.4.0
      helm:
        valueFiles:
          - $values/apps/minio/custom-values.yaml
    - repoURL: 'https://github.com/pablodelarco/kubernetes-homelab'
      targetRevision: main
      ref: values
  destination:
    server: 'https://kubernetes.default.svc'
    namespace: minio
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
    syncOptions:
      - CreateNamespace=true
```

Push the configuration to your Git repository and apply this Application manifest with kubectl apply, as you did for Longhorn. Because automated sync is enabled, ArgoCD deploys MinIO on its own; you can also trigger a sync manually from the UI. Verify the deployment with:

```bash
kubectl get all -n minio
```

If something goes wrong, check the application’s sync status, conditions, and events with:

```bash
kubectl describe application minio -n argocd
```
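Longhorn writes its backups into the k8s-backups bucket, which must exist in MinIO before the first backup runs. You can create it from the MinIO console, or, as a sketch assuming the MinIO chart’s buckets value, let the chart create it by adding something like this to apps/minio/custom-values.yaml:

```yaml
# Assumption: the chart's bucket-creation job (which uses the mc client from
# mcImage) creates every bucket listed here
buckets:
  - name: k8s-backups   # must match the bucket in Longhorn's backupTarget
    policy: none        # no anonymous access
    purge: false        # keep existing contents if the bucket already exists
```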

Step 4: Using MinIO for Longhorn Backups

We’ve already declared the backup target and credential secret in Longhorn’s custom-values.yaml. However, if you prefer to configure them manually, follow these steps:

- Open the Longhorn UI and navigate to Settings > General.

- Enter the following details:

  - Backup Target: s3://k8s-backups@us-east-1/

  - Backup Target Credential Secret: minio-credentials
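Setting a backup target does not by itself schedule any backups. To automate them, a RecurringJob can back up every volume in the default group on a schedule. This is a sketch assuming Longhorn’s longhorn.io/v1beta2 RecurringJob resource, which is available in 1.7.x; the name, schedule, and retention count are illustrative.

```yaml
apiVersion: longhorn.io/v1beta2
kind: RecurringJob
metadata:
  name: nightly-backup        # hypothetical name
  namespace: longhorn-system
spec:
  task: backup                # send a backup to the configured target (MinIO)
  cron: "0 2 * * *"           # every day at 02:00
  groups:
    - default                 # volumes without their own recurring jobs belong to this group
  retain: 7                   # keep the 7 most recent backups per volume
  concurrency: 1              # back up one volume at a time
  labels: {}
```

Apply it with kubectl apply -f, and the backups should start appearing in the k8s-backups bucket after the next scheduled run.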

Conclusion

With Longhorn and MinIO, your Kubernetes homelab now features resilient, scalable storage for backups and object storage. This setup ensures your data is secure, accessible, and disaster-ready, all managed declaratively with GitOps.

In the next post, we’ll enhance our homelab with Prometheus and Grafana for observability and monitoring. See you in Part 3! 🚀

If you’d like to connect or discuss more, feel free to follow me on LinkedIn!